How Amazon S3 Really Works: Designing Storage for Internet Scale

Amazon S3 looks deceptively simple: put an object, get an object. Behind that simplicity is one of the most sophisticated distributed storage systems ever built.

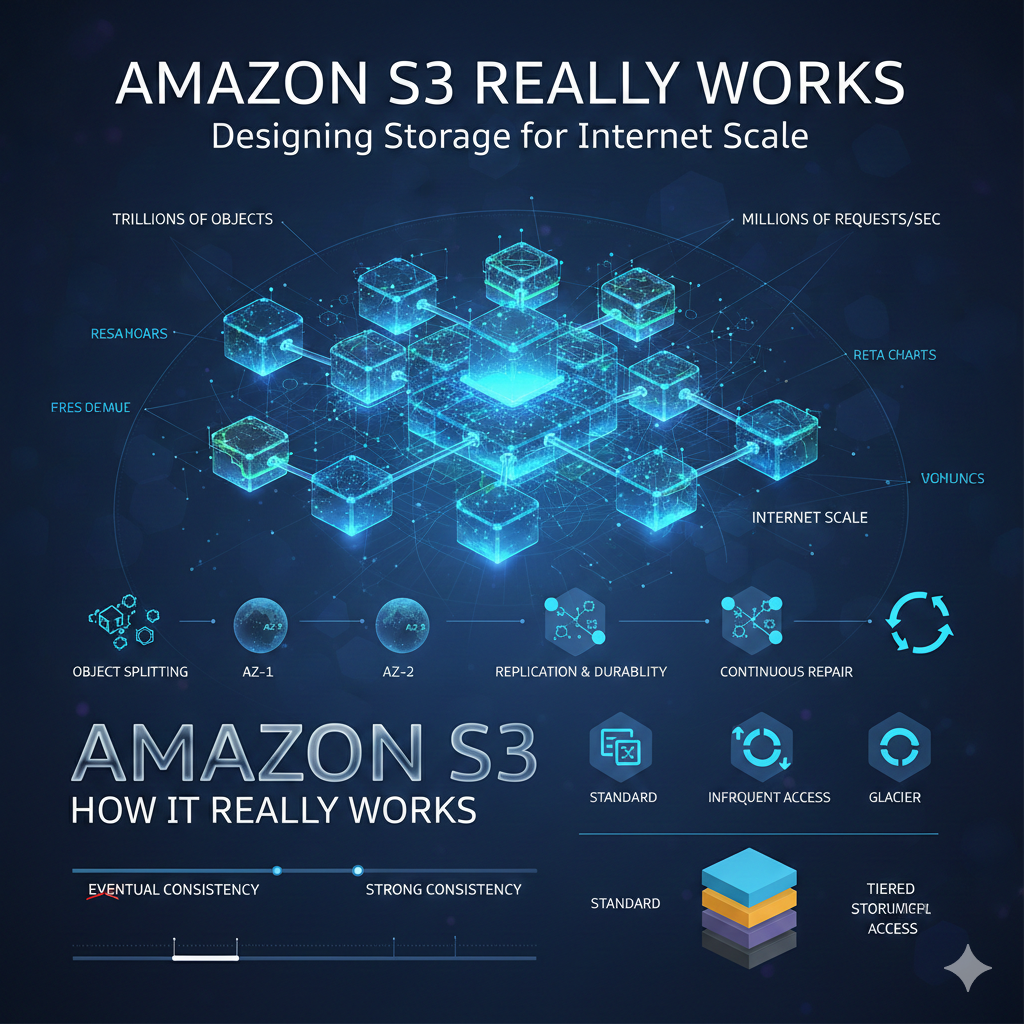

In this post, I'll break down how S3 works from a system design perspective: how it stores trillions of objects, delivers extreme durability, scales automatically, and stays fast under massive global load.

Why Object Storage Is a System Design Problem

S3 is not a file system and not a database. It is object storage, optimized for scale, durability, and availability rather than low-latency random writes.

Designing such a system means solving problems at an entirely different order of magnitude.

Trillions of objects

Exabytes of data

Millions of requests per second

Traditional storage assumptions break at this scale. S3 exists because distributed systems need storage that grows without coordination bottlenecks.

The Core Abstraction: Buckets and Objects

S3 exposes only two primary abstractions:

Bucket: a container for objects, with a globally unique name

Object: immutable data + metadata

There are no directories, only key prefixes. The "folders" shown in the console are a display convention over a flat key space. This design choice removes the need for hierarchical locking or directory-level metadata coordination.

Every object is addressed by a key, and the system treats keys as opaque identifiers.
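To make the "prefixes, not directories" idea concrete, here is a minimal sketch of a folder-style listing computed over a flat set of keys. The function name `list_keys` and the sample keys are made up for illustration; the prefix and delimiter parameters are modeled on the ones the S3 list API exposes, but this is not how S3 implements listing internally.

```python
def list_keys(keys, prefix="", delimiter="/"):
    """Emulate a folder-style listing over a flat key space."""
    contents, common_prefixes = [], set()
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        if delimiter and delimiter in rest:
            # Roll everything up to the next delimiter into one "folder" entry.
            common_prefixes.add(prefix + rest.split(delimiter, 1)[0] + delimiter)
        else:
            contents.append(key)
    return contents, sorted(common_prefixes)


keys = ["logs/2024/01.txt", "logs/2024/02.txt", "logs/readme.md", "index.html"]
print(list_keys(keys, prefix="logs/"))
# (['logs/readme.md'], ['logs/2024/'])
```

Nothing in the key set changes when a "folder" appears or disappears; the grouping exists only in the listing result.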

High-Level Architecture: Cells, Not a Monolith

S3 is built as a collection of independent cells, each responsible for a subset of buckets.

No global metadata server

Failure isolation by design

Horizontal scalability

This cell-based architecture ensures that failures remain localized and do not cascade across the entire system.

From a system design lens, this is a textbook example of blast radius reduction.
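The blast radius argument can be illustrated with a toy model. The sketch below assumes buckets are assigned to cells by a stable hash; that assignment scheme, the cell names, and both function names are assumptions for illustration, since S3's real routing is not described here.

```python
import hashlib

CELLS = ["cell-a", "cell-b", "cell-c", "cell-d"]  # hypothetical cell names


def cell_for(bucket: str) -> str:
    """Map a bucket to one cell with a stable hash (illustrative only)."""
    digest = hashlib.sha256(bucket.encode("utf-8")).digest()
    return CELLS[int.from_bytes(digest[:8], "big") % len(CELLS)]


def affected_buckets(buckets, failed_cell):
    """Buckets that lose service when a single cell fails."""
    return [b for b in buckets if cell_for(b) == failed_cell]


buckets = [f"bucket-{i}" for i in range(1000)]
impacted = affected_buckets(buckets, "cell-a")
print(f"{len(impacted)} of {len(buckets)} buckets affected by one cell failure")
```

With four cells, a single cell failure touches roughly a quarter of the buckets in this model; adding cells shrinks that fraction further.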

How S3 Stores Data Internally

When you upload an object to S3, several things happen behind the scenes:

The object is split into chunks

Chunks are distributed across multiple storage nodes

Metadata is stored separately from data

S3 does not rely on a single copy. Instead, it uses redundancy across multiple devices and facilities.

This separation of data and metadata allows independent scaling and recovery.
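The upload steps above can be sketched in a few lines. This is a toy model under stated assumptions: the chunk size, node names, the copy count of three, and the hash-based placement are all invented for the example, and plain copies stand in for whatever redundancy scheme S3 actually uses.

```python
import hashlib

CHUNK_SIZE = 4   # bytes; tiny so the example is easy to follow
NODES = ["node-0", "node-1", "node-2", "node-3", "node-4"]  # hypothetical nodes
COPIES = 3       # assumed redundancy factor for this sketch


def put_object(key, data, node_store, metadata_store):
    """Split data into chunks, place each on COPIES distinct nodes, record metadata."""
    chunk_ids = []
    for offset in range(0, len(data), CHUNK_SIZE):
        chunk = data[offset:offset + CHUNK_SIZE]
        chunk_id = hashlib.sha256(chunk).hexdigest()
        start = int(chunk_id, 16) % len(NODES)
        for i in range(COPIES):
            node = NODES[(start + i) % len(NODES)]
            node_store.setdefault(node, {})[chunk_id] = chunk
        chunk_ids.append(chunk_id)
    # Metadata lives in its own store and only points at the chunks.
    metadata_store[key] = {"size": len(data), "chunks": chunk_ids}


def get_object(key, node_store, metadata_store):
    """Reassemble an object from any node that still holds each chunk."""
    parts = []
    for chunk_id in metadata_store[key]["chunks"]:
        holder = next(n for n in NODES if chunk_id in node_store.get(n, {}))
        parts.append(node_store[holder][chunk_id])
    return b"".join(parts)


nodes, meta = {}, {}
put_object("photos/cat.jpg", b"hello, object storage", nodes, meta)
nodes.pop("node-0", None)  # simulate losing one node
nodes.pop("node-1", None)  # and a second one
print(get_object("photos/cat.jpg", nodes, meta))
```

Because every chunk sits on three distinct nodes out of five, losing any two nodes still leaves at least one readable copy, and the metadata store is untouched by the failure.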

Replication and the 11 Nines of Durability

S3 is designed for 99.999999999% (11 nines) durability of objects over a given year.

This is achieved through redundant storage (replication and erasure coding) across multiple Availability Zones.

Data is stored across at least three AZs in most storage classes (single-AZ classes such as One Zone-IA are the exception)

Each AZ has independent power, cooling, and networking

Failures are assumed, not treated as exceptions

Instead of preventing failures, S3 is designed to continuously repair itself.

Background processes constantly verify data integrity and recreate lost replicas.
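It helps to turn the 11 nines figure into an expected loss rate. The short calculation below treats the durability number as an average annual per-object survival probability, which is a simplification; the figure of 10 million stored objects is just an example input.

```python
from fractions import Fraction

durability = Fraction("0.99999999999")    # 11 nines, per object per year
annual_loss_probability = 1 - durability  # exactly 1e-11

objects_stored = 10_000_000
expected_losses_per_year = annual_loss_probability * objects_stored
years_per_expected_loss = 1 / expected_losses_per_year

print(float(expected_losses_per_year))  # 0.0001
print(int(years_per_expected_loss))     # 10000
```

In other words, under this reading, storing 10 million objects means expecting to lose a single object about once every 10,000 years on average.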

Consistency Model: From Eventual to Strong

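Historically, S3 offered read-after-write consistency only for newly created objects; overwrites, deletes, and listings were eventually consistent.

Since December 2020, S3 provides strong read-after-write consistency for object reads, writes, deletes, and list operations. Bucket configuration changes remain eventually consistent.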

This shift required deep architectural changes:

Versioned metadata updates

Carefully ordered replication

Distributed consensus within cells

The key takeaway: strong consistency at scale is possible, but only with careful partitioning and failure isolation.
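One way to picture "versioned metadata updates" combined with "carefully ordered replication" is a replica that only applies updates in version order. The class below is a toy model written for this post, not a description of S3's internals: the class name, the per-key integer versions, and the buffering strategy are all assumptions.

```python
class MetadataReplica:
    """Applies versioned updates strictly in order (toy model)."""

    def __init__(self):
        self.applied = {}  # key -> (version, value)
        self.pending = {}  # key -> {version: value} waiting for predecessors

    def receive(self, key, version, value):
        current = self.applied.get(key, (0, None))[0]
        if version <= current:
            return  # duplicate or stale update; ignore it
        waiting = self.pending.setdefault(key, {})
        waiting[version] = value
        # Apply every consecutive version that is now available.
        while current + 1 in waiting:
            current += 1
            self.applied[key] = (current, waiting.pop(current))

    def read(self, key):
        return self.applied.get(key, (0, None))[1]


replica = MetadataReplica()
replica.receive("a.txt", 2, "second write")  # arrives early, so it is buffered
print(replica.read("a.txt"))                 # None
replica.receive("a.txt", 1, "first write")   # unblocks versions 1 and 2
print(replica.read("a.txt"))                 # second write
```

The property being illustrated is that a reader of this replica never sees an older value after a newer one, even when updates arrive out of order or more than once.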

Why S3 Is Fast (Even Though It’s Distributed)

S3 is optimized for throughput, not single-request latency.

Several design choices contribute to its performance:

Massive internal parallelism

Multipart uploads and byte-range downloads

Stateless request handling

Clients are encouraged to upload large objects in parts, allowing S3 to distribute work across many nodes simultaneously.

Performance comes from scale, not from a single fast disk.
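As a sketch of how a client might carve a large object into parts, the helper below computes part boundaries. The function name `plan_parts` and the 8 MiB default are my own choices; the 5 MiB minimum part size and the 10,000-part ceiling are the documented multipart upload limits. The actual network calls are left out so the example stays self-contained.

```python
MIB = 1024 * 1024
MIN_PART_SIZE = 5 * MIB  # minimum size for every part except the last
MAX_PARTS = 10_000       # maximum number of parts in one multipart upload


def plan_parts(total_size: int, part_size: int = 8 * MIB):
    """Return (part_number, offset, length) tuples covering the object."""
    if part_size < MIN_PART_SIZE:
        raise ValueError("part_size is below the 5 MiB minimum")
    # Grow the part size if the object would otherwise need too many parts.
    min_needed = -(-total_size // MAX_PARTS)  # ceiling division
    part_size = max(part_size, min_needed)
    parts = []
    offset, number = 0, 1  # part numbers start at 1
    while offset < total_size:
        length = min(part_size, total_size - offset)
        parts.append((number, offset, length))
        offset += length
        number += 1
    return parts


parts = plan_parts(20 * MIB)
print(len(parts))  # 3
```

Each tuple describes an independent byte range, so the parts can be sent concurrently (for example from a thread pool) and a failed part can be retried without resending the whole object.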

Metadata at Scale

Metadata is often the real bottleneck in storage systems.

S3 treats metadata as first-class data, storing it in highly available, partitioned systems.

Object size

Checksum

Version ID

ACLs and policies

By decoupling metadata from object payloads, S3 can handle high request rates without touching the actual data.
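The benefit of keeping metadata apart from payloads shows up in a small model. The `ToyStore` class and its field names are hypothetical; the point is only that a metadata-style request can be answered without reading any object bytes.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectMetadata:
    size: int
    checksum: str
    version_id: str


class ToyStore:
    def __init__(self):
        self.metadata = {}      # key -> ObjectMetadata (small records)
        self.payloads = {}      # key -> bytes (large blobs)
        self.payload_reads = 0  # how often the data path was touched

    def put(self, key, data, version_id):
        self.payloads[key] = data
        self.metadata[key] = ObjectMetadata(
            size=len(data),
            checksum=hashlib.sha256(data).hexdigest(),
            version_id=version_id,
        )

    def head(self, key):
        """Answer from the metadata index alone."""
        return self.metadata[key]

    def get(self, key):
        self.payload_reads += 1
        return self.payloads[key]


store = ToyStore()
store.put("video.mp4", b"x" * 1024, version_id="v1")
print(store.head("video.mp4").size, store.payload_reads)  # 1024 0
```

Existence checks, size lookups, and checksum comparisons all stay on the metadata path in this model, which is why that path can be scaled and tuned separately from bulk data transfer.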

Security, Isolation, and Access Control

S3 security is enforced at multiple layers:

IAM policies

Bucket policies

Object-level ACLs (a legacy mechanism, disabled by default on new buckets)

Every request is authenticated and authorized before it reaches the storage layer.

This makes S3 suitable as a shared multi-tenant system without cross-account data leakage.
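The layered checks can be sketched as a single decision function. This is a deliberately simplified model of policy evaluation: an explicit deny wins, then any allow, otherwise the request is denied by default. It supports only single-string actions and resources with a trailing `*` wildcard, and it ignores principals, conditions, and cross-account rules. The function names and sample policies are made up for illustration.

```python
def matches(statement, action, resource):
    """True if the statement covers this action and resource."""
    def covers(pattern, value):
        if pattern.endswith("*"):
            return value.startswith(pattern[:-1])
        return pattern == value
    return covers(statement["Action"], action) and covers(statement["Resource"], resource)


def is_allowed(policies, action, resource):
    """Explicit deny wins, then any allow, otherwise implicit deny."""
    statements = [s for policy in policies for s in policy if matches(s, action, resource)]
    if any(s["Effect"] == "Deny" for s in statements):
        return False
    return any(s["Effect"] == "Allow" for s in statements)


identity_policy = [
    {"Effect": "Allow", "Action": "s3:*", "Resource": "arn:aws:s3:::reports/*"},
]
bucket_policy = [
    {"Effect": "Deny", "Action": "s3:DeleteObject", "Resource": "arn:aws:s3:::reports/*"},
]
policies = [identity_policy, bucket_policy]

print(is_allowed(policies, "s3:GetObject", "arn:aws:s3:::reports/q1.csv"))     # True
print(is_allowed(policies, "s3:DeleteObject", "arn:aws:s3:::reports/q1.csv"))  # False
```

The default-deny fallthrough is what keeps tenants isolated: a request that no policy mentions never reaches the data.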

Lifecycle Management and Tiered Storage

S3 is not just one storage class.

It is a family of storage tiers optimized for different access patterns:

Standard

Intelligent-Tiering

Infrequent Access (Standard-IA and One Zone-IA)

Glacier (Instant Retrieval, Flexible Retrieval, and Deep Archive)

Lifecycle rules allow objects to move automatically between tiers.

This is a classic example of policy-driven system behavior replacing manual operations.
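Here is a minimal sketch of how a lifecycle rule maps an object's age to a storage tier. The rule dictionary is modeled on the shape of an S3 lifecycle configuration, but the rule ID, prefix, and day thresholds are invented, and `storage_class_for` is a hypothetical local evaluator rather than anything S3 exposes.

```python
rule = {
    "ID": "archive-logs",  # hypothetical rule name
    "Filter": {"Prefix": "logs/"},
    "Status": "Enabled",
    "Transitions": [
        {"Days": 30, "StorageClass": "STANDARD_IA"},
        {"Days": 90, "StorageClass": "GLACIER"},
    ],
    "Expiration": {"Days": 365},
}


def storage_class_for(key, age_days, rule, default="STANDARD"):
    """Return the storage class an object should be in, or None once expired."""
    if rule["Status"] != "Enabled" or not key.startswith(rule["Filter"]["Prefix"]):
        return default
    if age_days >= rule["Expiration"]["Days"]:
        return None
    current = default
    for transition in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= transition["Days"]:
            current = transition["StorageClass"]
    return current


for age in (10, 45, 120, 400):
    print(age, storage_class_for("logs/app.log", age, rule))
```

The operator writes the rule once; from then on the tier of every matching object is a pure function of its age, with no per-object manual work.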

Failure Handling as a First-Class Feature

In S3, disks fail. Machines fail. Entire AZs can fail.

The system treats this as the normal operating state.

Heartbeat-based failure detection

Background replication repair

No human intervention required

Durability is achieved not by perfect hardware, but by continuous verification and repair.
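The detect-and-repair loop can be sketched as follows. The timeout, the target of three copies, the node names, and both function names are assumptions made for the example; a real system would also copy the data and account for placement constraints such as spreading copies across AZs.

```python
HEARTBEAT_TIMEOUT = 10.0  # seconds; assumed threshold
TARGET_COPIES = 3         # assumed redundancy target


def live_nodes(last_heartbeat, now):
    """Nodes whose most recent heartbeat is within the timeout."""
    return {n for n, t in last_heartbeat.items() if now - t <= HEARTBEAT_TIMEOUT}


def repair(placement, last_heartbeat, now):
    """Re-replicate chunks that lost copies on dead nodes; return the new placement."""
    alive = live_nodes(last_heartbeat, now)
    repaired = {}
    for chunk, holders in placement.items():
        healthy = [n for n in holders if n in alive]
        spares = sorted(alive - set(healthy))
        # Top up with spare live nodes until the target copy count is met.
        # A chunk with no healthy copy left has nothing to copy from.
        needed = max(0, TARGET_COPIES - len(healthy)) if healthy else 0
        repaired[chunk] = healthy + spares[:needed]
    return repaired


heartbeats = {"n1": 100.0, "n2": 100.0, "n3": 85.0, "n4": 99.0}
placement = {"chunk-a": ["n1", "n2", "n3"]}
print(repair(placement, heartbeats, now=101.0))
# {'chunk-a': ['n1', 'n2', 'n4']}
```

Node `n3` missed its heartbeat window, so the chunk is topped back up to three copies on `n4` without anyone being paged.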

System Design Lessons from S3

S3 teaches several timeless system design principles:

Simplicity at the interface, complexity underneath

Partition everything

Assume failure and automate recovery

Optimize for scale, not edge cases

These ideas apply far beyond storage systems.

Closing Thought

S3 is not just a storage service; it is a masterclass in distributed system design.

Its success comes from making the hard problems invisible to users while solving them rigorously underneath.

If you want to design systems that scale to millions of users and petabytes of data, understanding S3 is a great place to start.