Making Voice AI Truly Real-Time: Reducing Latency in Production Systems

Making Voice AI Truly Real-Time: Reducing Latency in Production Systems

Short description:

Voice AI is booming. From customer support to personal assistants, everyone is racing to build conversational systems. In India alone, public demos and debates—like the Bluemachine AI discussion with Arnab—have pushed Voice AI into mainstream attention. But while demos look impressive, building a Voice AI system that feels truly real-time in production is a very different challenge.

This post takes a deep, system-level look at where latency actually comes from in Voice AI pipelines, why most early implementations feel sluggish, and how production systems reduce end-to-end delay without sacrificing reliability.

Why Real-Time Voice AI Is Harder Than It Looks

Voice interaction has an extremely low tolerance for delay. Humans are conditioned to expect near-instant responses during spoken conversations.

Even a pause of a few hundred milliseconds feels unnatural. A full second of delay feels broken.

This makes Voice AI fundamentally different from chat-based systems. In chat, latency is expected. In voice, latency breaks immersion.

The challenge is not making individual components fast. The challenge is ensuring that the entire system behaves like a single, responsive organism.

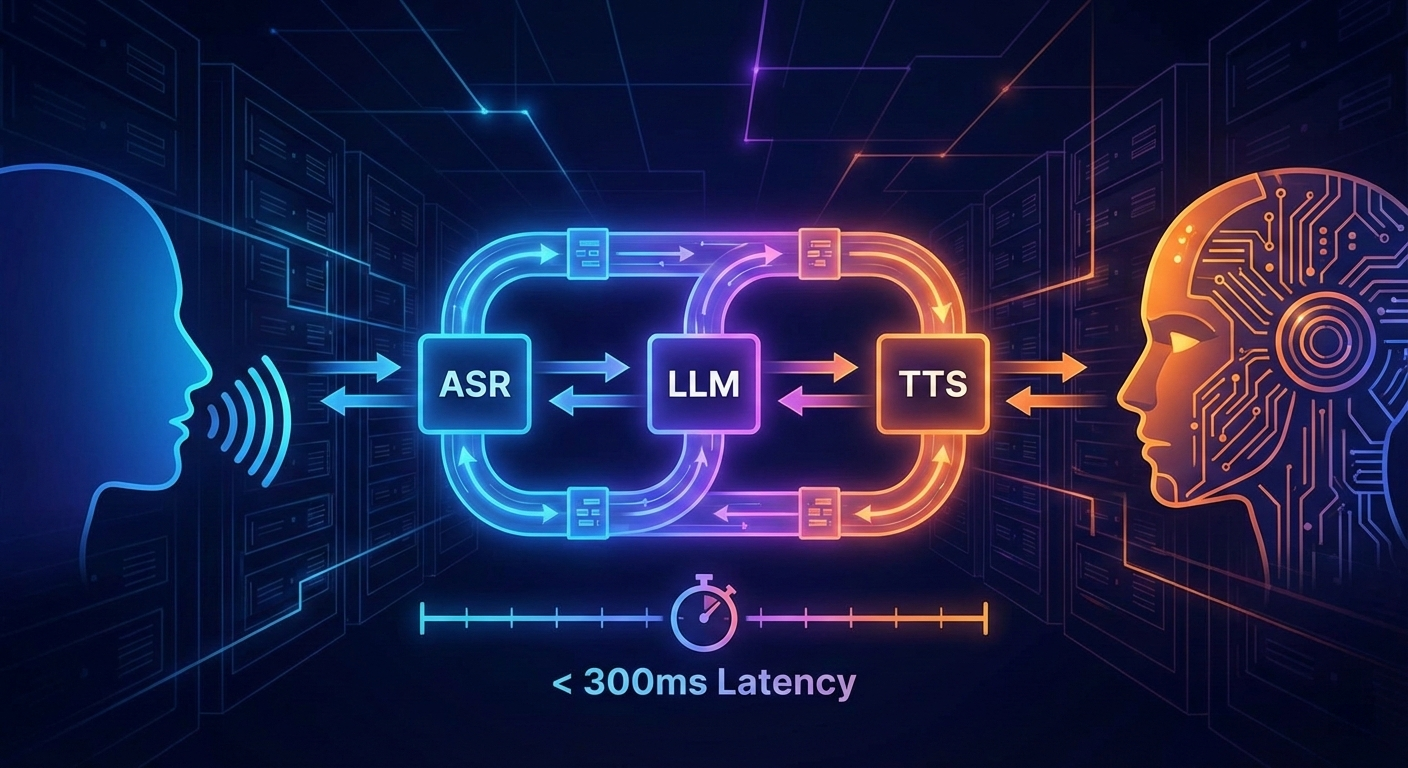

The End-to-End Voice AI Pipeline

Most production Voice AI systems follow a similar high-level architecture, regardless of vendor or framework.

User Speech

|

v

[ Microphone / Client SDK ]

|

v

[ Streaming Audio Transport ]

|

v

[ Speech-to-Text (ASR) ]

|

v

[ Language Model / Reasoning ]

|

v

[ Text-to-Speech (TTS) ]

|

v

[ Audio Playback ]

Each stage introduces latency, variability, and potential failure modes.

Optimizing one stage in isolation rarely helps. Latency accumulates across boundaries.

Why Streaming Is Non-Negotiable

The most important architectural decision in Voice AI is whether the system is streaming-first or batch-based.

Batch systems wait until the user finishes speaking, then process everything at once. This approach is simpler to implement but fundamentally incompatible with real-time interaction.

Streaming systems process audio incrementally as it arrives.

Audio Frames ---> ASR (Partial Results)

|

v

Incremental Transcript

|

v

Early LLM Tokens

|

v

Streaming TTS Playback

Streaming reduces perceived latency even if total processing time remains unchanged. Users hear responses sooner, which matters more than total compute time.

In practice, streaming is the difference between a system that feels conversational and one that feels robotic.

Speech-to-Text: Latency Starts Here

Speech-to-text is often the first place where latency accumulates.

High-accuracy ASR models are computationally expensive. Low-latency models trade accuracy for speed. In production, teams rarely choose one extreme.

Instead, they apply layered strategies:

Use streaming ASR instead of full transcription

Accept partial transcripts early

Refine accuracy asynchronously if needed

Another critical factor is deployment topology.

Running ASR in a distant region can add tens or hundreds of milliseconds due to network round trips alone. Mature systems push ASR as close to the user as possible.

In real-world usage, users tolerate minor transcription errors far more than they tolerate silence.

LLMs: Where Latency Is Usually Self-Inflicted

LLMs are often blamed for slow Voice AI systems, but the bottleneck is rarely raw model speed.

The real issue is how models are used.

Large prompts, unnecessary context, and waiting for full responses introduce avoidable delays.

Production systems optimize LLM usage aggressively:

Context trimming and prompt minimization

Streaming token output instead of full completions

Early intent classification before deep reasoning

In many cases, the system does not need a perfect response. It needs the next meaningful response.

This mindset shift alone often cuts perceived latency dramatically.

Text-to-Speech: Where Silence Becomes Obvious

Text-to-speech is the final stage, and the most visible to users.

If audio playback does not begin quickly, the entire system feels broken—regardless of how fast earlier stages were.

Modern systems stream TTS output incrementally:

LLM Tokens

|

v

Text Chunks

|

v

Audio Chunks

|

v

Immediate Playback

This allows speech to begin while the model is still generating text.

Production teams also avoid cold starts by keeping voice models warm in memory. Cold-starting a TTS model during a conversation is almost always unacceptable.

Infrastructure Is Often the Real Bottleneck

Once models are optimized, infrastructure becomes the dominant source of latency.

Cross-region calls, cold containers, overloaded gateways, and connection setup times all add unpredictable delays.

Well-designed systems reduce this by:

Co-locating ASR, LLM, and TTS services

Using persistent connections such as WebSockets or WebRTC

Avoiding deep synchronous call chains

In real-time Voice AI, every network hop matters.

Latency Budgets: How Production Teams Think

Mature Voice AI teams assign explicit latency budgets to each stage of the pipeline.

Target End-to-End Latency: ~300ms

--------------------------------

ASR Processing : 80ms

LLM Inference : 120ms

TTS Generation : 70ms

Network Overhead : 30ms

When a component exceeds its budget, the system degrades gracefully instead of blocking.

This forces teams to make trade-offs early rather than discovering bottlenecks in production.

What Actually Works in Production

Despite different tools and vendors, production Voice AI systems tend to converge on similar principles.

Streaming-first architecture

Strict timeouts and backpressure

Observability across every stage

Most importantly, latency is treated as a product feature, not an engineering afterthought.

Teams that succeed optimize for perceived responsiveness, not theoretical correctness.

How Production Systems Actually Reduce Latency

At scale, latency is not solved by a single optimization. It is solved by making latency a first-class constraint across architecture, infrastructure, and product decisions.

The solutions below are patterns that repeatedly show up in real-world Voice AI systems that feel genuinely conversational under load.

1. Treat Streaming as a Hard Requirement, Not an Optimization

Many systems claim to be streaming, but only stream at one or two layers. This creates hidden buffering points that reintroduce latency.

Production systems enforce streaming at every boundary:

Client → Backend (audio frames, not files)

ASR → Orchestrator (partial transcripts)

LLM → TTS (token-level output)

TTS → Client (audio chunks)

If any layer waits for completion, the entire pipeline slows down.

Streaming must be treated as a design invariant, not a performance tweak added later.

2. Introduce Explicit Latency Budgets Per Component

One of the biggest mindset shifts in mature systems is assigning explicit latency budgets.

Instead of asking “why is this slow?”, teams ask “who is overspending latency?”.

A typical production budget looks like:

End-to-End Target: 250–350ms

ASR : 70–90ms

LLM : 100–140ms

TTS : 60–80ms

Networking : 20–40ms

When a component exceeds its budget, the system degrades gracefully instead of blocking.

This forces realistic trade-offs early, before traffic exposes weaknesses.

3. Decouple Conversation Flow from Heavy Computation

A common mistake is tying conversational flow directly to expensive operations.

Production systems separate “keeping the conversation alive” from “doing heavy work”.

Examples include:

Acknowledging intent before full reasoning completes

Responding with short confirmations while background processing continues

Deferring non-critical enrichment to async workflows

This prevents the user experience from being hostage to the slowest operation.

4. Use Early Intent Detection Instead of Full Reasoning

Most voice interactions do not require full LLM reasoning upfront.

Production systems often run a fast, lightweight intent classifier before invoking deeper reasoning.

This allows the system to:

Route requests to specialized handlers

Trigger canned or partial responses early

Skip expensive prompts when unnecessary

Deep reasoning is reserved for cases where it actually adds value.

5. Keep Models Warm and State Close

Cold starts are catastrophic for Voice AI.

Successful systems aggressively avoid them:

ASR and TTS models are kept resident in memory

LLM connections are pooled and reused

Containers are pre-warmed during low traffic

In real-time systems, saving 50ms matters more than saving compute cost.

6. Collapse Network Hops Aggressively

Every network hop adds latency and variance.

Production Voice AI systems minimize hops by:

Co-locating ASR, LLM, and TTS services

Avoiding synchronous calls across regions

Using in-process orchestration where possible

Even “fast” internal APIs become bottlenecks when chained.

7. Prefer Persistent Connections Over Request-Based Protocols

Connection setup time is often underestimated.

Systems that rely on short-lived HTTP requests repeatedly pay this cost.

Production systems favor:

WebSockets for long-lived audio streams

WebRTC for low-latency, bidirectional media

Connection reuse wherever possible

This alone can shave tens of milliseconds from every interaction.

8. Design for Partial Failure, Not Perfect Execution

In real-time Voice AI, things will fail mid-conversation.

Instead of blocking, systems are designed to degrade gracefully:

If ASR confidence drops, ask clarifying questions

If LLM slows down, respond with partial confirmations

If TTS fails, fall back to simpler voices

Maintaining conversational flow is more important than perfect output.

9. Measure What Users Actually Feel

Internal metrics often lie.

Production teams measure latency the way users perceive it:

Time to first audio response

Silence duration after user stops speaking

Conversation interruption frequency

Optimizing these metrics leads to better user experience than raw component timings.

10. Accept That Trade-offs Are Permanent

There is no perfect Voice AI system.

Every production deployment makes deliberate compromises between accuracy, cost, and responsiveness.

The teams that succeed acknowledge this early and design systems that fail softly instead of breaking hard.

Closing Thought

Voice AI feels magical only when it responds instantly.

Reducing latency is not about one clever trick. It’s about disciplined system design, realistic trade-offs, and accepting that responsiveness matters more than perfection.

The systems that get this right don’t just generate speech. They create conversations that feel alive.